How Website Crawlers Help Website Owners Increase Traffic to Their Sites

Take a look at all the ways website crawlers help you boost your site’s traffic.

#1

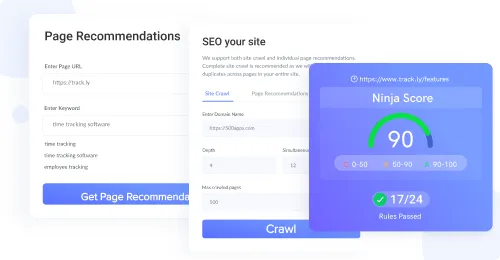

Audit, optimize websites, build links and grade webpages

#2

Get a detailed report of backlinks from a frequently updated database of 3.2B inlinks

#3

Generate keywords and LSI based on Google keywords search tool

#4

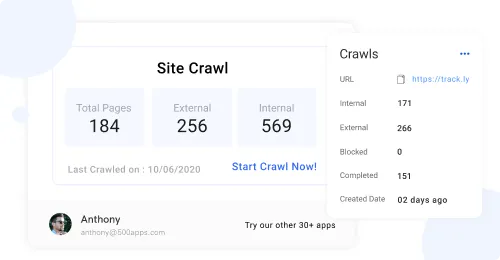

Generate the site crawl map to get the hierarchical structure of all the web pages and links

#5

Crawl webpages, find scores & get recommendations using a powerful extension

#6

Identify author details based on specific keywords & automate outreach activity using a links bot

#7

Foster Link Building Process

#8

Instant Sitemap Generation

#9

Unbelievable pricing - the lowest you will ever find

#10

Everything your business needs - 50 apps, 24/5 support and 99.95% uptime

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an internet bot that systematically browses the World Wide Web, typically for web indexing.

To put it more simply, a web crawler is a program that collects documents from websites. This is the foundation of search engines: to build an index, Google, Yahoo, and other search engines use crawlers to browse the Internet automatically.

Apart from collecting documents from the web, some crawlers search for email addresses, RSS feeds, and other data.

Web search engines like Google, Yahoo, and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine; the copied web pages would then be added to the index so that users can search more efficiently.

How Website Crawlers Work

Gadget gram explains in a tweet that "Web crawlers are used by search engines to explore the web in order to help internet users find websites."

Tianna has also explained the step-by-step process of crawling, indexing, and the other operations of web crawlers.

Search engines use spiders (also known as web crawlers) to explore the web, not spin their own.

A web crawler copies webpages so that the search engine can process and index them later. This indexing allows users of the search engine to find webpages quickly. The web crawler also validates links and HTML code, and sometimes it extracts other information from the website.

Put simply, web crawlers browse through all the available information on the net and group it into categories for indexing and cataloging so that the assembled information can be accessed and evaluated quickly.

Here is how web crawlers work, and how they help online businesses:

Excavating URLs: one of the first things web crawlers do is discover URLs. There are three ways they do this: by revisiting webpages they have crawled in the past, by following links from a webpage they have already crawled, and by crawling the URLs listed in a sitemap that the website owner submits to search engines. A sitemap is a file with information about the pages on a particular website. Web crawlers crawl a website more easily when it has a clear sitemap and is easy to navigate.
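
As a rough illustration of the sitemap route, the sketch below pulls page URLs out of a small XML sitemap using Python's standard library. The sitemap content, the `example.com` addresses, and the function name `urls_from_sitemap` are assumptions made up for this example; real crawlers handle much larger files, sitemap indexes, and extra fields.

```python
import xml.etree.ElementTree as ET

# Hypothetical sitemap content; example.com and its paths are placeholders.
SITEMAP_XML = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
</urlset>
"""

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text):
    """Return the page URLs listed in a sitemap, in document order."""
    root = ET.fromstring(xml_text.strip())
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


for url in urls_from_sitemap(SITEMAP_XML):
    print(url)
```

Each `<loc>` entry becomes a URL the crawler can add to its list of pages to visit.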

Exploring many seeds: after discovering URLs, the next thing web crawlers do is visit the addresses (URLs, also known as seeds) the search engine provides. The crawler visits each URL, copies all the links on each page, and adds them to the bank of URLs to explore. From then on, crawlers use sitemaps and links discovered during previous crawls to determine which URLs to explore next.
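
The seed-exploring loop can be sketched as a breadth-first traversal. To keep the example runnable without network access, an in-memory dictionary stands in for real pages; `LINK_GRAPH`, the `crawl` function, and the `max_pages` limit are hypothetical names chosen for illustration, not how any particular search engine implements it.

```python
from collections import deque

# Hypothetical link graph standing in for real pages: URL -> links found on that page.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}


def crawl(seeds, get_links, max_pages=100):
    """Visit pages breadth-first, starting from the seed URLs."""
    frontier = deque(seeds)   # the "bank" of URLs still to explore
    seen = set(seeds)         # URLs already queued, to avoid revisiting
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


order = crawl(["https://example.com/"], lambda url: LINK_GRAPH.get(url, []))
print(order)
```

In a real crawler, `get_links` would download the page and extract its links; here it only looks them up in the dictionary.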

Adding to the index: web crawlers explore the seeds on their lists to fetch the content and add it to the index, where search engines store all the information they have gathered. The index holds not only text documents but also images, videos, and other files. Its size is above 100 million gigabytes!

Updating the index: web crawlers do more than search the web; they also monitor content keywords, the uniqueness of the content, and other key signals to build a full picture of each webpage. Google explains that “The software pays special attention to new sites, changes to existing sites, and dead links.” These activities let the software update the search index and keep it up to date.

Crawling frequency: web crawlers do not sleep; they crawl the internet continuously. On how often they crawl each webpage, Google says, “Computer programs determine which sites to crawl, how often, and how many pages to fetch from each site.” What determines how often web crawlers visit your pages is therefore the perceived importance of your page, your website's crawl demand, recent changes you have made to your website, and the level of interest searchers and Google have in it. A popular website is crawled more often so that its audience and subscribers can get its newest content.
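
To give a feel for what "adding to the index" means, here is a toy inverted index that maps each word to the pages containing it. This is only a sketch under simple assumptions (plain text, lowercase word matching); the page texts and the names `build_index` and `search` are invented for the example and say nothing about how a production search index is built.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into lowercase words made of letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical crawled pages.
pages = {
    "https://example.com/a": "Web crawlers index pages",
    "https://example.com/b": "Sitemaps help crawlers",
}
index = build_index(pages)
print(search(index, "sitemaps crawlers"))
```

Looking a word up in the index is much faster than rereading every page, which is why search engines build the index ahead of time.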

Blocking Web Crawlers

You may decide to keep some pages on your website out of search engine result pages (SERPs) to prevent sensitive, irrelevant, or unnecessary pages from appearing in search engines.

You may also decide to block web crawlers from adding your website to their index with a 'Do not enter' signal. There are three ways to do this: using a robots.txt file, returning an HTTP status code that says the page doesn't exist, or using a noindex meta tag. Each of these keeps a page from being crawled or indexed. Webmasters also sometimes block web crawlers from a page without meaning to, which is why you need to check whether web crawlers can access your webpages.

Using Robots.txt Protocols to Block Web Crawlers

When you decide to block web crawlers from accessing your website, you use a robots.txt file.

Webmasters use the robots.txt protocol to make their webpages accessible or inaccessible to web crawlers. You can include many other things in your robots.txt file: for example, you can choose which pages a bot can crawl, which links a crawler can follow, or you can prevent a bot from accessing your website altogether. Google makes this easy by providing the same tools to all webmasters, with no special license or bribe required.
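
As an illustration, the sketch below checks URLs against a small robots.txt file with Python's built-in `urllib.robotparser` module. The rules, the crawler names `MyCrawler` and `BadBot`, and the `example.com` URLs are assumptions invented for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: everyone is kept out of /admin/ and /search,
# and a crawler calling itself "BadBot" is blocked from the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent, url):
    """Return True if the given crawler may fetch the URL under these rules."""
    return parser.can_fetch(agent, url)


print(allowed("MyCrawler", "https://example.com/blog/post"))
print(allowed("MyCrawler", "https://example.com/admin/users"))
print(allowed("BadBot", "https://example.com/blog/post"))
```

Keep in mind that robots.txt is a request that well-behaved crawlers honor; it does not technically stop a bot that ignores it.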

Using No-Index Meta Tag to Block Web Crawlers

The noindex meta tag is another, or perhaps the first, roadblock for web crawlers. It prevents search engines from indexing a particular page. Pages you might want to mark with the noindex meta tag include admin pages, internal search results, thank-you pages, and other similar pages.
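
For illustration, this is roughly how a crawler might check a page for a noindex directive, sketched with Python's standard `html.parser` module. The class name `RobotsMetaParser`, the helper `is_noindex`, and the sample HTML are assumptions made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for part in attr.get("content", "").split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def is_noindex(html):
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical admin page that should stay out of search results.
admin_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(is_noindex(admin_page))
```

A page without such a tag is treated as indexable by default.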

Why Do You Need Website Crawlers?

Website crawlers play a significant role in how high your website ranks on search engine result pages (SERPs). Readability and reachability are vital for SEO, although how website crawlers help businesses can be difficult for some people to understand.

In straightforward terms, web crawler behavior helps webpages appear faster and higher in SERPs, which improves user experience, although SEO takes other factors into consideration aside from crawling.

There are many things a web crawler can do to boost your website; among them are:

Search Engine Optimization: for your website to be readable and reachable by your audience and subscribers, you need web crawlers to improve your website rankings. Crawling helps search engines check your page, and regular follow-up crawling lets them display your changes and stay up to date. Crawling is a good way to keep appearing in searches and strengthen the bond with your users. This is a major way website crawlers help your online business.

E-commerce benefit: you can use web crawling to get product information, collect product or service ads, scrape your competitors' social media channels, and predict trends from your competitors.

To create applications for tools with no public developer API: web crawling lets you access a website's data by bypassing its public application programming interface (API), which websites use to restrict access to particular data. Web crawling has several advantages over a public API: 1. You have access to any information or data on the website. 2. There is no limit to the number of queries. 3. You need not sign up for an API key or follow the API's rules.

Effective data management: web crawling saves you the hassle of copying and pasting data from the internet by hand. Bot crawling gives you the freedom to choose which data to collect. With more sophisticated web crawling techniques, you can also store your data in a cloud database that is updated daily.

Effective data storing: web crawling allows data to be stored properly by automatic programs or tools. This means your workers, your company, or anyone with access will spend less time copying and pasting data and can focus on more creative work.

How Do Web Crawlers Actually Help to Generate Leads and Sales?

The ways web crawlers can boost your business by generating leads and sales are endless. Here, we go through how website crawlers help your business, from the core aspects of bot crawling to lead generation and sales.

When a visitor arrives at your website, there are two things they want right away:

- To know that they are in the place they wanted to be

- To quickly find what they are looking for on your website.

Notably, this is also what search engines want for those visiting our websites, and they are now better at determining how well our content serves the interests of our audiences.

What web crawlers do is take what they have found and learned on different pages and bring it back to the search engines. Afterwards, they add new information about the site to the search engine's master map. This process is what search engines use to index and rank the content of websites according to a number of different factors.

Offering an opinion on this, Adam Audette, an expert, explained that "Today, it is not just about 'get the traffic', it's about 'get the targeted and relevant traffic'." Web crawlers help you go after targeted customers and audiences to generate leads and sales.

This Is Exactly How Web Crawlers Help Businesses

To optimize your price: you may find it difficult to set a price for a particular product, so web scraping comes in handy here. First, web crawling can gather customer information and help you understand how to satisfy customers' needs by realigning your market strategy. Second, it lets you stay in touch with price changes in the market so that you don't price too high or too low.

Lead generation: web crawling lets you research your target audience and define your market persona, e.g. education, job titles, and other attributes. It also helps you find the relevant websites in your niche.

Investment decision: making an investment decision is uncertain, and it involves a series of research steps and processes. Web crawlers help you extract the necessary historical information about previous successes, failures, and pitfalls to avoid. This historical data analysis is what big investors and companies use to make decisions, and they do it by extracting the necessary data from websites using web crawlers.

Product optimization: this is valuable if you want to launch a new product or improve existing ones. Use web crawlers to collect product reviews, as they have a great impact on customers' buying decisions. Before launching a new product, you need to know customers' preferences and their attitude towards the product by analyzing their behavior, and you get enough data for this through web crawling.

Conclusion

When a search engine crawler reviews your site, it does so in much the same way as a user. If the crawler finds it difficult to parse your data correctly, you're setting yourself up for poorer rankings. With a solid understanding of the underlying technology and protocols these crawlers follow, you're able to optimize your site for better ranking potential from the get-go.